#Sophisticated Algorithms and Large Datasets

The Complexities of AI-Human Collaboration

Introduction

In recent years, artificial intelligence (AI) has been transforming many facets of our lives, from improving how we make decisions to automating repetitive jobs. One of the most important advances in this field is the cooperation between AI systems and people. For all its potential, this alliance is not without difficulties. This blog post discusses the complexities of AI-human collaboration: its advantages, its drawbacks, its ethical implications, and the prospects for peaceful coexistence with machine intelligence.

I. The Potential for AI and Human Cooperation

1.1 Improved Decision-Making

Because of their sophisticated algorithms and access to large datasets, AI systems can process and analyze data faster and at a larger scale than humans can. Working alongside people, they can offer valuable insights that help teams make better decisions. This is especially true in industries like healthcare, where AI can help physicians diagnose hard-to-detect illnesses by examining patient records and medical images.

1.2 Efficiency and Automation

Automation powered by AI has the potential to optimize workflows in a variety of sectors, lowering labor costs and boosting output. In manufacturing, for instance, robots and AI-powered machinery can perform repetitive, labor-intensive jobs with precision while humans concentrate on the more sophisticated and creative aspects of the work.

1.3 Personalized Experiences

Many online services now offer AI-driven personalization as standard, from targeted marketing campaigns to the recommendation algorithms on streaming platforms. By working with AI to provide highly tailored experiences, businesses can increase customer satisfaction and engagement.

II. The Difficulties of AI-Human Collaboration

2.1 Ethical Dilemmas

As AI becomes more and more ingrained in our daily lives, it raises ethical concerns about privacy, data security, and fairness. Collaborative AI systems must follow ethical standards to ensure responsible data use, non-discrimination, and transparency. The Cambridge Analytica scandal and the ongoing debates about algorithmic bias and data privacy show how difficult it is to keep AI-human cooperation ethical.

2.2 Job Displacement

There has been a lot of discussion about automation leading to job displacement. Although AI can take over repetitive activities, this raises the question of what role humans will play in the workplace. Businesses must carefully weigh the efficiency gains brought by AI against the social and economic consequences of job displacement.

2.3 Limited Contextual Understanding

Despite their power, AI systems frequently fail to grasp the broader context of human relationships and emotions. This limitation can cause miscommunication, misinterpretation, and even harmful decisions, particularly in sensitive or emotional situations such as healthcare or customer service.

2.4 Accountability and Trust

Trust is essential for AI systems to be successfully integrated into a variety of disciplines. Establishing and preserving trust in AI requires openness, accountability, and addressing potential biases and errors. Without that trust, it is harder for AI and people to work together seamlessly.

III. AI-Human Collaboration in Real-World Settings

3.1 Medical Care

Healthcare practitioners have benefited from AI-powered diagnostic systems, such as IBM's Watson, that help with disease diagnosis and treatment recommendations. Collaboration between AI and medical professionals may lead to faster, more accurate diagnosis and treatment, possibly saving lives.

3.2 Self-Driving Cars

AI-human cooperation is essential to the development of self-driving cars. The AI system drives, but humans are needed to supervise, make decisions in difficult situations, and handle unforeseen circumstances. The goal of this collaboration is to improve road safety and reduce accidents.

3.3 Customer Service

Organizations widely use chatbots and virtual assistants to respond to standard customer inquiries. AI can handle routine questions efficiently, while human agents are on hand to resolve trickier problems and add a personal touch, resulting in a smoother customer service experience.
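The bot-then-human handoff described above can be sketched as a confidence threshold: answer when a question closely matches a known routine question, escalate to a person otherwise. This is a minimal, hypothetical example; the FAQ entries, the 0.75 cut-off, and the use of `difflib` string similarity are illustrative assumptions, not how any particular chatbot product works.

```python
from difflib import SequenceMatcher

# Hypothetical FAQ: routine questions the bot may answer on its own.
FAQ = {
    "what are your opening hours": "We are open 9am to 5pm, Monday to Friday.",
    "how do i reset my password": "Use the 'Forgot password' link on the login page.",
}

ESCALATION_THRESHOLD = 0.75  # assumed cut-off; below it, a human agent takes over


def route(question: str) -> tuple[str, str]:
    """Return ("bot", answer) for routine questions, ("human", question) otherwise."""
    normalized = question.lower().strip(" ?!.")
    best_score, best_answer = 0.0, None
    for known, answer in FAQ.items():
        score = SequenceMatcher(None, normalized, known).ratio()
        if score > best_score:
            best_score, best_answer = score, answer
    if best_answer is not None and best_score >= ESCALATION_THRESHOLD:
        return "bot", best_answer
    return "human", question


print(route("How do I reset my password?"))                  # handled by the bot
print(route("My order arrived damaged and I want a refund"))  # escalated to a human
```

Real systems typically use intent classifiers rather than raw string similarity, but the design choice is the same: the AI only acts on its own when it is confident, and everything else goes to a person.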

3.4 Content Creation

AI is being used to create content, including music, news articles, and even artwork. AI systems work with journalists and artists to open new avenues for creativity. However, questions remain about the authenticity and originality of AI-generated content.

IV. Overcoming the Difficulties

4.1 Ethical AI Development

Organizations and developers must make ethical AI development a top priority by building values like accountability, transparency, fairness, and data privacy into their operations. Strong legal frameworks, ethical standards, and continuous oversight can help achieve this.

4.2 Human-AI Training

Promoting effective collaboration requires educating people about AI and its capabilities. Training programs can help users understand the strengths and limitations of these systems so they can work more productively with AI tools.

4.3 Hybrid Roles

Fears of job displacement can be reduced by designing roles that combine human and AI activities. By harnessing AI's potential while retaining human oversight and expertise, this strategy creates a hybrid workforce that benefits from the best of both.

4.4 Collaborative Design

AI-human collaboration should be considered when designing user interfaces and systems. This means ensuring that AI systems are easy to use, give understandable feedback, and fit into existing workflows.

V. The Future of AI-Human Collaboration

5.1 Augmented Intelligence

The idea of augmented intelligence, in which AI systems complement human abilities rather than replace them, holds the key to the future of AI-human collaboration. As AI develops, it will become a crucial tool for unlocking human potential across a range of domains.

5.2 AI as a Complement

AI will increasingly function as an assistant to humans, providing support and insight and automating repetitive chores. This collaboration could increase productivity and efficiency in a variety of fields.

5.3 Frameworks for Ethics and Regulations

The establishment of moral and legal frameworks that uphold user rights and promote responsible AI use will be necessary for the growth of AI-human collaboration. These frameworks will aid in addressing the issues of algorithmic bias, accountability, and data privacy.

5.4 Continuous Learning

To work together effectively, humans and AI systems will both need to keep adapting and learning. AI systems will need ongoing training to improve their grasp of human context and emotions, and humans will need to stay informed about AI capabilities and limitations.

In summary

There is no denying the complexity of AI-human collaboration; it raises issues of ethics, job displacement, contextual understanding, and trust. But by working together, AI and humans can deliver personalized experiences, better decision-making, and automation, which makes this a promising direction for the future. By tackling these difficulties through ethical development, training, and hybrid roles, we can create the conditions for the peaceful coexistence of human and machine intelligence. This will open up opportunities that benefit individuals as well as society at large. AI-human cooperation has the potential to be a revolutionary force that ushers in a period of expanded human capabilities and augmented intelligence.

If you want to learn more about artificial intelligence, I recommend reading this book to expand your knowledge: https://amzn.to/3Sr5Tbo

On generative AI

I've had 2 asks about this lately so I feel like it's time to post a clarifying statement.

I will not provide assistance related to using "generative artificial intelligence" ("genAI") [1] applications such as ChatGPT. This is because, for ethical and functional reasons, I am opposed to genAI.

I am opposed to genAI because its operators steal the work of people who create, including me. This complaint is usually associated with text-to-image (T2I) models, like Midjourney or Stable Diffusion, which generate "AI art". However, large language models (LLMs) do the same thing, just with text. ChatGPT was trained on a large research dataset known as the Common Crawl (Brown et al, 2020). For an unknown period ending at latest 29 August 2023, Tumblr did not discourage Common Crawl crawlers from scraping the website (Changes on Tumblr, 2023). Since I started writing on this blog circa 2014–2015 and have continued fairly consistently in the interim, that means the Common Crawl incorporates a significant quantity of my work. If it were being used for academic research, I wouldn't mind. If it were being used by another actual human being, I wouldn't mind, and if they cited me, I definitely wouldn't mind. But it's being ground into mush and extruded without credit by large corporations run by people like Sam Altman (see Hoskins, 2025) and Elon Musk (see Ingram, 2024) and the guy who ruined Google (Zitron, 2024), so I mind a great deal.

I am also opposed to genAI because of its excessive energy consumption and the lengths to which its operators go to ensure that energy is supplied. Individual cases include the off-grid power station which is currently poisoning Black people in Memphis, Tennessee (Kerr, 2025), so that Twitter's genAI application Grok can rant incoherently about "white genocide" (Steedman, 2025). More generally, as someone who would prefer to avoid getting killed for my food and water in a few decades' time, I am unpleasantly reminded of the study that found that bitcoin mining emissions alone could make runaway climate change impossible to prevent (Mora et al, 2018). GenAI is rapidly scaling up to produce similar amounts of emissions, with the same consequences, for the same reasons (Luccioni, 2024). [2]

It is theoretically possible to create genAI which doesn't steal and which doesn't destroy the planet. Nobody's going to do it, and if they do do it, no significant share of the userbase will migrate to it in the foreseeable future — same story as, again, bitcoin — but it's theoretically possible. However, I also advise against genAI for any application which requires facts, because it can't intentionally tell the truth. It can't intentionally do anything; it is a system for using a sophisticated algorithm to assemble words in plausibly coherent ways. Try asking it about the lore of a media property you're really into and see how long it takes to start spouting absolute crap. It also can't take correction; it literally cannot, it is unable — the way the neural network is trained means that simply inserting a factual correction, even with administrator access, is impossible even in principle.

GenAI can never "ascend" to intelligence; it's not a petri dish in which an artificial mind can grow; it doesn't contain any more of the stuff of consciousness than a spreadsheet. The fact that it seems like it really must know what it's saying means nothing. To its contemporaries, ELIZA seemed like that too (Weizenbaum, 1966).
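For readers who haven't seen how ELIZA worked: it matched keywords in the user's input and reassembled fragments of that input into canned replies, with no model of meaning at all. The sketch below imitates that approach; the rules and pronoun reflections are made up for illustration and are far smaller than Weizenbaum's original DOCTOR script.

```python
import re

# Pronoun swaps so the user's own words can be echoed back ("my" -> "your").
REFLECTIONS = {"i": "you", "me": "you", "my": "your", "am": "are", "you": "I", "your": "my"}

# Hypothetical keyword rules in the spirit of ELIZA: a pattern that decomposes
# the input, and a template that reassembles a reply from the captured fragment.
RULES = [
    (re.compile(r"i feel (.*)", re.I), "Why do you feel {0}?"),
    (re.compile(r"i am (.*)", re.I), "How long have you been {0}?"),
    (re.compile(r".*\bmother\b.*", re.I), "Tell me more about your family."),
]
DEFAULT = "Please go on."


def reflect(fragment: str) -> str:
    """Swap first- and second-person words in a captured fragment."""
    return " ".join(REFLECTIONS.get(word.lower(), word) for word in fragment.split())


def respond(text: str) -> str:
    """Return the first matching rule's reply, or a stock prompt if nothing matches."""
    cleaned = text.strip().rstrip(".!?")
    for pattern, template in RULES:
        match = pattern.fullmatch(cleaned)
        if match:
            return template.format(*(reflect(group) for group in match.groups()))
    return DEFAULT


print(respond("I feel ignored by my friends."))  # Why do you feel ignored by your friends?
print(respond("The weather is nice"))            # Please go on.
```

Nothing in this program knows what "ignored" or "friends" means, yet the reply reads as if it understood.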

The stuff which is my focus on this blog — untraining and more broadly AB/DL in general — is not inherently dangerous or sensitive, but it overlaps with stuff which, despite being possible to access and use in a safe manner, has the potential for great danger. This is heightened quite a bit given genAI's weaknesses around the truth. If you ask ChatGPT whether it's safe to down a whole bottle of castor oil, as long as you use the right words, even unintentionally, it will happily tell you to go ahead. If I endorse or recommend genAI applications for this kind of stuff, or assist with their use, I am encouraging my readers toward something I know to be unsafe. I will not be doing that. Future asks on the topic will go unanswered.

Notes

[1] I use quote marks here because as far as I am concerned, both "generative artificial intelligence" and "genAI" are misleading labels adopted for branding purposes; in short, lies. GenAI programs aren't artificial intelligences because they don't think, and because they don't emulate thinking or incorporate human thinking; they're just a program for associating words in a mathematically sophisticated but deterministic way. "GenAI" is also a lie because it's intended to associate generative AI applications with artificial general intelligence (AGI), i.e., artificial beings that actually think, or pretend to as well as a human does. However, there is no alternative term at the moment, and I understand I look weird if I use quote marks throughout the piece, so I dispense with them after this point.

[2] As a mid-to-low-income PC user I am also pissed off that GPUs are going to get worse and more expensive again, but that kind of pales in comparison to everything else.

References

Brown, T.B., Mann, B., Ryder, N., Subbiah, M., Kaplan, J., ... & Amodei, D. (2020, July 22). Language models are few-shot learners [version 4]. arXiv. doi: 10.48550/arXiv.2005.14165. Retrieved 25 May 2025.

Changes on Tumblr (2023, August 29). Tuesday, August 29th, 2023 [Text post]. Tumblr. Retrieved 25 May 2025.

Hoskins, P. (2025, January 8). ChatGPT creator denies sister's childhood rape claim. BBC News. Retrieved 25 May 2025.

Ingram, D. (2024, June 13). Elon Musk and SpaceX sued by former employees alleging sexual harassment and retaliation. NBC News. Retrieved 25 May 2025.

Kerr, D. (2025, April 25). Elon Musk's xAI accused of pollution over Memphis supercomputer. The Guardian. Retrieved 25 May 2025.

Luccioni, S. (2024, December 18). Generative AI and climate change are on a collision course. Wired. Retrieved 25 May 2025.

Mora, C., Rollins, R.L., Taladay, K., Kantar, M.B., Chock, M.K., ... & Franklin, E.C. (2018, October 29). Bitcoin emissions alone could push global warming above 2°C. Nature Climate Change, 8, 931–933. doi: 10.1038/s41558-018-0321-8. Retrieved 25 May 2025.

Steedman, E. (2025, May 25). For hours, chatbot Grok wanted to talk about a 'white genocide'. It gave a window into the pitfalls of AI. ABC News (Australian Broadcasting Corporation). Retrieved 25 May 2025.

Weizenbaum, J. (1966, January). ELIZA—a computer program for the study of natural language communication between man and machine. Communications of the ACM, 9(1), 36–45. doi: 10.1145/365153.365168. Retrieved 25 May 2025.

Zitron, E. (2024, April 23). The man who killed Google Search. Where's Your Ed At. Retrieved 25 May 2025.

AI is not a panacea. This assertion may seem counterintuitive in an era where artificial intelligence is heralded as the ultimate solution to myriad problems. However, the reality is far more nuanced and complex. AI, at its core, is a sophisticated algorithmic construct, a tapestry of neural networks and machine learning models, each with its own limitations and constraints.

The allure of AI lies in its ability to process vast datasets with speed and precision, uncovering patterns and insights that elude human cognition. Yet, this capability is not without its caveats. The architecture of AI systems, often built upon layers of deep learning frameworks, is inherently dependent on the quality and diversity of the input data. This dependency introduces a significant vulnerability: bias. When trained on skewed datasets, AI models can perpetuate and even exacerbate existing biases, leading to skewed outcomes that reflect the imperfections of their training data.
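A toy illustration of how skew in training data carries through to predictions: the "model" below simply memorizes the majority outcome for each combination of features in some hypothetical historical hiring records. Real systems are far more complex, but the basic failure mode is similar: the model never sees anything that says group B should be treated differently, yet it learns to do so from the records.

```python
from collections import Counter, defaultdict

# Hypothetical historical decisions: (group, qualified, hired).
# Qualified group "B" candidates were rarely hired, so the skew is in the data itself.
history = (
    [("A", True, True)] * 80 + [("A", True, False)] * 20
    + [("B", True, True)] * 20 + [("B", True, False)] * 80
)


def train(records):
    """A deliberately naive model: predict the majority outcome seen for each (group, qualified) pair."""
    outcomes = defaultdict(Counter)
    for group, qualified, hired in records:
        outcomes[(group, qualified)][hired] += 1
    return {key: counts.most_common(1)[0][0] for key, counts in outcomes.items()}


model = train(history)
print(model[("A", True)])  # True: qualified A candidates get hired
print(model[("B", True)])  # False: equally qualified B candidates do not
```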

Moreover, AI’s decision-making process, often described as a “black box,” lacks transparency. The intricate web of weights and biases within a neural network is not easily interpretable, even by its creators. This opacity poses a challenge for accountability and trust, particularly in critical applications such as healthcare and autonomous vehicles, where understanding the rationale behind a decision is paramount.

The computational prowess of AI is also bounded by its reliance on hardware. The exponential growth of model sizes, exemplified by transformer architectures like GPT, demands immense computational resources. This requirement not only limits accessibility but also raises concerns about sustainability and energy consumption. The carbon footprint of training large-scale AI models is non-trivial, challenging the narrative of AI as an inherently progressive technology.

Furthermore, AI’s efficacy is context-dependent. While it excels in environments with well-defined parameters and abundant data, its performance degrades in dynamic, uncertain settings. The rigidity of algorithmic logic struggles to adapt to the fluidity of real-world scenarios, where variables are in constant flux and exceptions are common rather than rare.

In conclusion, AI is a powerful tool, but it is not a magic bullet. It is a complex, multifaceted technology that requires careful consideration and responsible deployment. The promise of AI lies not in its ability to solve every problem, but in its potential to augment human capabilities and drive innovation, provided we remain vigilant to its limitations and mindful of its impact.

Simulated universe previews panoramas from NASA's Roman Telescope

Astronomers have released a set of more than a million simulated images showcasing the cosmos as NASA's upcoming Nancy Grace Roman Space Telescope will see it. This preview will help scientists explore Roman's myriad science goals.

"We used a supercomputer to create a synthetic universe and simulated billions of years of evolution, tracing every photon's path all the way from each cosmic object to Roman's detectors," said Michael Troxel, an associate professor of physics at Duke University in Durham, North Carolina, who led the simulation campaign. "This is the largest, deepest, most realistic synthetic survey of a mock universe available today."

The project, called OpenUniverse, relied on the now-retired Theta supercomputer at the DOE's (Department of Energy's) Argonne National Laboratory in Illinois. In just nine days, the supercomputer accomplished a process that would take over 6,000 years on a typical computer.

In addition to Roman, the 400-terabyte dataset will also preview observations from the Vera C. Rubin Observatory, and approximate simulations from ESA's (the European Space Agency's) Euclid mission, which has NASA contributions. The Roman data is available now, and the Rubin and Euclid data will soon follow.

The team used the most sophisticated modeling of the universe's underlying physics available and fed in information from existing galaxy catalogs and the performance of the telescopes' instruments. The resulting simulated images span 70 square degrees, equivalent to an area of sky covered by more than 300 full moons. In addition to covering a broad area, it also covers a large span of time—more than 12 billion years.
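The "more than 300 full moons" comparison can be checked with a quick calculation. This sketch assumes the full Moon appears as a flat disk about 0.5 degrees across (its apparent size varies slightly over its orbit), which is accurate enough for a comparison like this.

```python
import math

FULL_MOON_DIAMETER_DEG = 0.5  # approximate apparent diameter of the full Moon
survey_area = 70.0            # square degrees, from the article

moon_area = math.pi * (FULL_MOON_DIAMETER_DEG / 2) ** 2  # area of one full Moon in square degrees
print(round(moon_area, 3))                 # 0.196
print(round(survey_area / moon_area))      # 357
```

About 357 full Moons fit in 70 square degrees, consistent with "more than 300".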

The project's immense space-time coverage shows scientists how the telescopes will help them explore some of the biggest cosmic mysteries. They will be able to study how dark energy (the mysterious force thought to be accelerating the universe's expansion) and dark matter (invisible matter, seen only through its gravitational influence on regular matter) shape the cosmos and affect its fate.

Scientists will get closer to understanding dark matter by studying its gravitational effects on visible matter. By studying the simulation's 100 million synthetic galaxies, they will see how galaxies and galaxy clusters evolved over eons.

Repeated mock observations of a particular slice of the universe enabled the team to stitch together movies that unveil exploding stars crackling across the synthetic cosmos like fireworks. These stellar explosions allow scientists to map the expansion of the simulated universe.
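Why exploding stars can map expansion: one type, Type Ia supernovae, peaks at nearly the same intrinsic brightness, so how bright one appears indicates how far away it is, through the distance modulus m - M = 5 log10(d / 10 pc). Comparing those distances with each supernova's redshift traces the expansion history. The sketch below assumes a peak absolute magnitude of about -19.3 (a commonly quoted approximate value) and ignores dust extinction, K-corrections, and the light-curve standardization real analyses apply.

```python
M_TYPE_IA = -19.3  # assumed approximate peak absolute magnitude of a Type Ia supernova


def luminosity_distance_mpc(apparent_mag: float, absolute_mag: float = M_TYPE_IA) -> float:
    """Invert the distance modulus m - M = 5*log10(d / 10 pc) and return d in megaparsecs."""
    distance_pc = 10 ** ((apparent_mag - absolute_mag + 5) / 5)
    return distance_pc / 1e6


# A Type Ia supernova observed at apparent magnitude 24.7 (a hypothetical value):
print(round(luminosity_distance_mpc(24.7)))  # 6310 (megaparsecs)
```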

Scientists are now using OpenUniverse data as a testbed for creating an alert system to notify astronomers when Roman sees such phenomena. The system will flag these events and track the light they generate so astronomers can study them.

That's critical because Roman will send back far too much data for scientists to comb through themselves. Teams are developing machine-learning algorithms to determine how best to filter through all the data to find and differentiate cosmic phenomena, like various types of exploding stars.
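A very simplified picture of the first step of such an alert system is to flag any source whose latest brightness jumps well above its own history. The function below is a hypothetical sketch: its name, the 5-sigma threshold, and the median/MAD noise estimate are assumptions, and actual survey pipelines work on difference images and use machine-learning classifiers to decide what kind of event was seen.

```python
from statistics import median


def flag_transients(light_curves, k=5.0):
    """Return IDs of sources whose newest flux exceeds their baseline by more than k robust sigmas.

    light_curves: dict mapping a source ID to a list of flux measurements, oldest first.
    """
    flagged = []
    for source_id, fluxes in light_curves.items():
        baseline, latest = fluxes[:-1], fluxes[-1]
        if len(baseline) < 3:
            continue  # not enough history to estimate the noise level
        center = median(baseline)
        mad = median(abs(f - center) for f in baseline)  # median absolute deviation
        sigma = 1.4826 * mad or 1e-9  # MAD scaled to a Gaussian sigma; avoid dividing by zero
        if (latest - center) / sigma > k:
            flagged.append(source_id)
    return flagged


curves = {
    "steady": [10.0, 10.2, 9.9, 10.1, 10.0],
    "flare": [10.0, 10.1, 9.9, 10.0, 25.0],  # sudden brightening, like a new supernova
}
print(flag_transients(curves))  # ['flare']
```

The median and MAD are used instead of the mean and standard deviation so that a single earlier outlier does not hide the noise level; the hard part the article describes, deciding which kind of explosion was flagged, comes after this step.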

"Most of the difficulty is in figuring out whether what you saw was a special type of supernova that we can use to map how the universe is expanding, or something that is almost identical but useless for that goal," said Alina Kiessling, a research scientist at NASA's Jet Propulsion Laboratory (JPL) in Southern California and the principal investigator of OpenUniverse.

While Euclid is already actively scanning the cosmos, Rubin is set to begin operations late this year and Roman will launch by May 2027. Scientists can use the synthetic images to plan the upcoming telescopes' observations and prepare to handle their data. This prep time is crucial because of the flood of data these telescopes will provide.

In terms of data volume, "Roman is going to blow away everything that's been done from space in infrared and optical wavelengths before," Troxel said. "For one of Roman's surveys, it will take less than a year to do observations that would take the Hubble or James Webb space telescopes around a thousand years. The sheer number of objects Roman will sharply image will be transformative."

"We can expect an incredible array of exciting, potentially Nobel Prize-winning science to stem from Roman's observations," Kiessling said. "The mission will do things like unveil how the universe expanded over time, make 3D maps of galaxies and galaxy clusters, reveal new details about star formation and evolution—all things we simulated. So now we get to practice on the synthetic data so we can get right to the science when real observations begin."

Astronomers will continue using the simulations after Roman launches for a cosmic game of spot the differences. Comparing real observations with synthetic ones will help scientists see how accurately their simulation predicts reality. Any discrepancies could hint at different physics at play in the universe than expected.

"If we see something that doesn't quite agree with the standard model of cosmology, it will be extremely important to confirm that we're really seeing new physics and not just misunderstanding something in the data," said Katrin Heitmann, a cosmologist and deputy director of Argonne's High Energy Physics division who managed the project's supercomputer time. "Simulations are super useful for figuring that out."

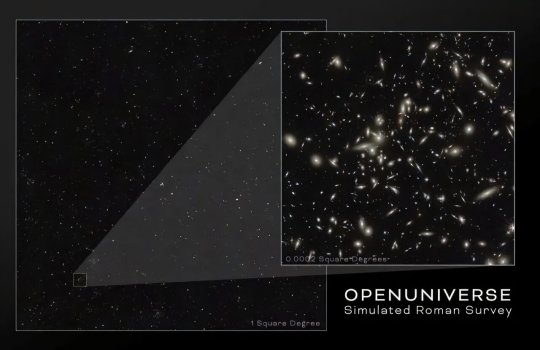

TOP IMAGE: Each tiny dot in the image at left is a galaxy simulated by the OpenUniverse campaign. The one-square-degree image offers a small window into the full simulation area, which is about 70 square degrees (equivalent to an area of sky covered by more than 300 full moons), while the inset at right is a close-up of an area 75 times smaller (1/600th the size of the full area). This simulation showcases the cosmos as NASA's Nancy Grace Roman Space Telescope could see it. Roman will expand on the largest space-based galaxy survey like it—the Hubble Space Telescope's COSMOS survey—which imaged two square degrees of sky over the course of 42 days. In only 250 days, Roman will view more than a thousand times more of the sky with the same resolution. Credit: NASA
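The caption's comparison implies a large jump in mapping speed, not just in total area. Taking "more than a thousand times more of the sky" as a lower bound of 1,000 times COSMOS's two square degrees, a quick calculation of the survey rates:

```python
cosmos_area, cosmos_days = 2.0, 42               # Hubble COSMOS survey, from the caption
roman_area, roman_days = 1000 * cosmos_area, 250  # lower bound from "more than a thousand times"

cosmos_rate = cosmos_area / cosmos_days  # square degrees per day
roman_rate = roman_area / roman_days
print(round(roman_rate / cosmos_rate))   # 168
```

So Roman would cover sky at least about 168 times faster per day than Hubble did for COSMOS, at comparable resolution.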

The AI Revolution: Understanding, Harnessing, and Navigating the Future

What Is AI?

In a world increasingly shaped by technology, one term stands out above the rest, capturing both our imagination and, at times, our apprehension: Artificial Intelligence. From science fiction dreams to tangible realities, AI is no longer a distant concept but an omnipresent force, subtly (and sometimes not-so-subtly) reshaping industries, transforming daily life, and fundamentally altering our perception of what's possible.

But what exactly is AI? Is it a benevolent helper, a job-stealing machine, or something else entirely? The truth, as always, is far more nuanced. At its core, Artificial Intelligence refers to the simulation of human intelligence processes by machines, especially computer systems. These processes include learning (the acquisition of information and rules for using the information), reasoning (using rules to reach approximate or definite conclusions), and self-correction. What makes modern AI so captivating is its ability to learn from data, identify patterns, and make predictions or decisions with increasing autonomy.

The journey of AI has been a fascinating one, marked by cycles of hype and disillusionment. Early pioneers in the mid-20th century envisioned intelligent machines that could converse and reason. While those early ambitions proved difficult to achieve with the technology of the time, the seeds of AI were sown. The 21st century, however, has witnessed an explosion of progress, fueled by advancements in computing power, the availability of massive datasets, and breakthroughs in machine learning algorithms, particularly deep learning. This has led to the "AI Spring" we are currently experiencing.

The Landscape of AI: More Than Just Robots

When many people think of AI, images of humanoid robots often come to mind. While robotics is certainly a fascinating branch of AI, the field is far broader and more diverse than just mechanical beings. Here are some key areas where AI is making significant strides:

Machine Learning (ML): This is the engine driving much of the current AI revolution. ML algorithms learn from data without being explicitly programmed. Think of recommendation systems on streaming platforms, fraud detection in banking, or personalized advertisements – these are all powered by ML.

Deep Learning (DL): A subset of machine learning inspired by the structure and function of the human brain's neural networks. Deep learning has been instrumental in breakthroughs in image recognition, natural language processing, and speech recognition. The facial recognition on your smartphone and the capabilities of the large language models behind today's AI chatbots are prime examples.

Natural Language Processing (NLP): This field focuses on enabling computers to understand, interpret, and generate human language. From language translation apps to chatbots that provide customer service, NLP is bridging the communication gap between humans and machines.

Computer Vision: This area allows computers to "see" and interpret visual information from the world around them. Autonomous vehicles rely heavily on computer vision to understand their surroundings, while medical imaging analysis uses it to detect diseases.

Robotics: While not all robots are AI-powered, many sophisticated robots leverage AI for navigation, manipulation, and interaction with their environment. From industrial robots in manufacturing to surgical robots assisting doctors, AI is making robots more intelligent and versatile.
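To make "learning from data without being explicitly programmed" concrete, here is a minimal naive Bayes spam filter, a classic version of the spam filtering that keeps inboxes clean. It is a sketch with made-up training messages: no rule says that "free" signals spam; the filter infers that from word counts in the examples.

```python
import math
from collections import Counter


def train_spam_filter(examples):
    """Count words per class from (text, label) pairs, where label True means spam."""
    word_counts = {True: Counter(), False: Counter()}
    class_counts = Counter()
    for text, label in examples:
        word_counts[label].update(text.lower().split())
        class_counts[label] += 1
    return word_counts, class_counts


def looks_like_spam(text, word_counts, class_counts):
    """Naive Bayes: compare the log-probability of the text under each class."""
    vocab_size = len(set(word_counts[True]) | set(word_counts[False]))
    total_docs = sum(class_counts.values())
    scores = {}
    for label in (True, False):
        total_words = sum(word_counts[label].values())
        score = math.log(class_counts[label] / total_docs)  # prior probability of the class
        for word in text.lower().split():
            # Laplace (add-one) smoothing so an unseen word doesn't zero out the score
            score += math.log((word_counts[label][word] + 1) / (total_words + vocab_size))
        scores[label] = score
    return scores[True] > scores[False]


# Hypothetical training data.
training = [
    ("win a free prize now", True),
    ("claim your free money", True),
    ("meeting moved to monday", False),
    ("lunch with the team tomorrow", False),
]
counts = train_spam_filter(training)
print(looks_like_spam("free prize money", *counts))       # True
print(looks_like_spam("team meeting tomorrow", *counts))  # False
```

Adding more labeled examples changes the filter's behavior without anyone editing its code, which is the core idea behind machine learning.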

AI's Impact: Transforming Industries and Daily Life

The transformative power of AI is evident across virtually every sector. In healthcare, AI is assisting in drug discovery, personalized treatment plans, and early disease detection. In finance, it's used for algorithmic trading, risk assessment, and fraud prevention. The manufacturing industry benefits from AI-powered automation, predictive maintenance, and quality control.

Beyond these traditional industries, AI is woven into the fabric of our daily lives. Virtual assistants like Siri and Google Assistant help us organize our schedules and answer our questions. Spam filters keep our inboxes clean. Navigation apps find the fastest routes. Even the algorithms that curate our social media feeds are a testament to AI's pervasive influence. These applications, while often unseen, are making our lives more convenient, efficient, and connected.

Harnessing the Power: Opportunities and Ethical Considerations

The opportunities presented by AI are immense. It promises to boost productivity, solve complex global challenges like climate change and disease, and unlock new frontiers of creativity and innovation. Businesses that embrace AI can gain a competitive edge, optimize operations, and deliver enhanced customer experiences. Individuals can leverage AI tools to automate repetitive tasks, learn new skills, and augment their own capabilities.

However, with great power comes great responsibility. The rapid advancement of AI also brings forth a host of ethical considerations and potential challenges that demand careful attention.

Job Displacement: One of the most frequently discussed concerns is the potential for AI to automate jobs currently performed by humans. While AI is likely to create new jobs, there will undoubtedly be a shift in the nature of work, requiring reskilling and adaptation.

Bias and Fairness: AI systems learn from the data they are fed. If that data contains historical biases (e.g., related to gender, race, or socioeconomic status), the AI can perpetuate and even amplify those biases in its decisions, leading to unfair outcomes. Ensuring fairness and accountability in AI algorithms is paramount.

Privacy and Security: AI relies heavily on data. The collection and use of vast amounts of personal data raise significant privacy concerns. Moreover, as AI systems become more integrated into critical infrastructure, their security becomes a vital issue.

Transparency and Explainability: Many advanced AI models, particularly deep learning networks, are often referred to as "black boxes" because their decision-making processes are difficult to understand. For critical applications, it's crucial to have transparency and explainability to ensure trust and accountability.

Autonomous Decision-Making: As AI systems become more autonomous, questions arise about who is responsible when an AI makes a mistake or causes harm. The development of ethical guidelines and regulatory frameworks for autonomous AI is an ongoing global discussion.

Navigating the Future: A Human-Centric Approach

Navigating the AI revolution requires a proactive and thoughtful approach. It's not about fearing AI, but rather understanding its capabilities, limitations, and implications. Here are some key principles for moving forward:

Education and Upskilling: Investing in education and training programs that equip individuals with AI literacy and skills in areas like data science, AI ethics, and human-AI collaboration will be crucial for the workforce of the future.

Ethical AI Development: Developers and organizations building AI systems must prioritize ethical considerations from the outset. This includes designing for fairness, transparency, and accountability, and actively mitigating biases.

Robust Governance and Regulation: Governments and international bodies have a vital role to play in developing appropriate regulations and policies that foster innovation while addressing ethical concerns and ensuring the responsible deployment of AI.

Human-AI Collaboration: The future of work is likely to be characterized by collaboration between humans and AI. AI can augment human capabilities, automate mundane tasks, and provide insights, allowing humans to focus on higher-level problem-solving, creativity, and empathy.

Continuous Dialogue: As AI continues to evolve, an ongoing, open dialogue among technologists, ethicists, policymakers, and the public is essential to shape its development in a way that benefits humanity.

The AI revolution is not just a technological shift; it's a societal transformation. By understanding its complexities, embracing its potential, and addressing its challenges with foresight and collaboration, we can harness the power of Artificial Intelligence to build a more prosperous, equitable, and intelligent future for all. The journey has just begun, and the choices we make today will define the world of tomorrow.


Unlock the Power of AI: Give Life to Your Videos with Human-Like Voice-Overs

Video has emerged as one of the most effective mediums for audience engagement in the fast-changing field of content creation. Whether you are a business owner, marketer, or YouTuber, producing high-quality videos is crucial. But what if you could make your videos even better? Enter AI voice-overs, the future of the video production industry.

It's now simpler than ever to create convincing, human-like voiceovers thanks to developments in artificial intelligence. Your listeners will find it difficult to tell these AI-powered voices apart from authentic human voices since they sound so realistic. However, what is AI voice-over technology really, and why is it important for content creators? Let's get started!

AI Voice-Overs: What Are They?

AI voice-overs are narrations produced by machine learning models. They are designed to replicate the subtleties, tones, and inflections of human speech so that they sound remarkably natural. They have numerous applications, including audiobooks, podcasts, ads, and video narration.

Creating voice-overs for videos used to require hiring professional voice actors, which could be costly and time-consuming. With AI, voice-overs can now be produced quickly without sacrificing quality.

Why Should Your Videos Have AI Voice-Overs?

Save Time and Money

Traditional voice acting can be expensive and time-consuming. The costs of scheduling recording sessions, hiring a voice actor, and editing the finished product can add up quickly. AI voice-overs, by contrast, can be produced in minutes and at a far lower price.

Consistency and Adaptability

With AI voice-overs, you can create consistent audio for all of your videos, regardless of their length or style. Want to change the tempo or tone? No problem: you can easily adjust the voice's characteristics.

Boost Audience Engagement

A realistic voice-over can make your content more captivating. The natural-sounding voices produced by AI will make your videos sound more polished and professional, which can improve your viewers' overall experience and increase retention.

Support for Multiple Languages

AI voice-overs can support multiple languages and accents, making your content accessible to a worldwide audience. Many AI tools can produce accurate, fluent voice-overs in a wide range of languages, including English, Spanish, French, and others.

Available at All Times

AI voice generators are always available! You can produce as many voice-overs as you need, whenever you need them. This is ideal for scaling up content production without adding more staff.

How Does AI Voice-Over Technology Work?

AI voice-over technology uses text-to-speech (TTS) algorithms to convert written text into spoken words. These systems are trained on large datasets of human speech, from which they learn the linguistic patterns and nuances that let them produce more lifelike voices.

The most sophisticated AI models can even adjust the voice according to context, emotion, and tone, producing voice-overs that sound as though they were recorded by a skilled human voice artist.

Where Can AI Voice-Overs Be Used?

YouTube Videos: Ideal for creators who want to give their work a polished feel without investing a lot of time in recording.

Explainers and Tutorials: AI voice-overs can narrate instructional videos or tutorials, making your material engaging and easy to understand.

Marketing Videos: Use expert voice-overs for advertisements, product demonstrations, and promotional videos to enhance the marketing content for your brand.

Podcasts: Using AI voice technology, you can produce material that sounds like a podcast, providing your audience with a genuine, human-like experience.

E-learning: AI-generated voices can be included in e-learning modules to give instructional materials polished, consistent narration.

Selecting the Best AI Voice-Over Program

Numerous AI voice-over tools are available, each with its own features. Popular choices include:

ElevenLabs: renowned for its customizable features and AI voices that seem natural.

HeyGen: Provides highly human-sounding, customizable AI voices, ideal for content creators.

Google Cloud Text-to-Speech: A dependable choice for multilingual, high-quality voice synthesis.

Choose an AI voice-over tool that lets you customize the output, pick from a variety of voices, and adjust tone and tempo.
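Many text-to-speech services, Google Cloud Text-to-Speech among them, accept SSML (Speech Synthesis Markup Language, a W3C standard). SSML's `<prosody>` element adjusts speaking rate and pitch, which is one way tone and tempo are controlled in practice. The helper below is a hypothetical sketch that only builds the markup string. Which values a particular service supports, and how it interprets them, should be checked in that service's documentation.

```python
from xml.sax.saxutils import escape

def build_ssml(script: str, rate: str = "default", pitch: str = "default") -> str:
    """Wrap plain narration text in SSML with a <prosody> element.

    rate and pitch take SSML values such as "slow", "fast", "90%" or "+2st".
    Text is XML-escaped so characters like & and < do not break the markup;
    attribute values are escaped too, including double quotes.
    """
    body = escape(script)
    rate_attr = escape(rate, {'"': "&quot;"})
    pitch_attr = escape(pitch, {'"': "&quot;"})
    return f'<speak><prosody rate="{rate_attr}" pitch="{pitch_attr}">{body}</prosody></speak>'

print(build_ssml("Welcome to parts 2 & 3 of the tutorial.", rate="90%", pitch="+2st"))
```

The resulting string would then be passed to a TTS service as SSML input rather than plain text.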

AI Voice-Overs' Prospects in Content Production

Voice-overs will only get better as AI technology advances. AI-generated voices may soon be indistinguishable from human voices, giving content creators even more ways to improve their work without spending heavily on voice actors or hours on recording.

The future is bright for content creators. AI voice-overs are a fascinating technology that can improve the quality of your videos while saving both money and time. Whether you're making marketing materials, YouTube videos, or online courses, adding AI voice-overs to your workflow can be transformative.

Are You Interested in AI Voice-Overs?

If you're ready to step up your content production, read my full post on how AI voice-overs can transform your videos. To help you get started right away, I've also included recommendations for some of the top AI voice-over programs on the market right now.


How will AI be used in health care settings?

Artificial intelligence (AI) shows tremendous promise for applications in health care. Tools such as machine learning algorithms, artificial neural networks, and generative AI (e.g., Large Language Models) have the potential to aid with tasks such as diagnosis, treatment planning, and resource management. Advocates have suggested that these tools could benefit large numbers of people by increasing access to health care services (especially for populations that are currently underserved), reducing costs, and improving quality of care.

This enthusiasm has driven the burgeoning development and trial application of AI in health care by some of the largest players in the tech industry. To give just two examples, Google Research has been rapidly testing and improving upon its “Med-PaLM” tool, and NVIDIA recently announced a partnership with Hippocratic AI that aims to deploy virtual health care assistants for a variety of tasks to address a current shortfall in the health care workforce.

What are some challenges or potential negative consequences to using AI in health care?

Technology adoption can happen rapidly, going in a short time from prototypes used by a small number of researchers to products affecting the lives of millions or even billions of people. Given the significant impact health care system changes could have on Americans’ health as well as on the U.S. economy, it is essential to identify potential pitfalls before scale-up takes place and to carefully consider policy actions that can address them.

One area of concern arises from the recognition that the ultimate impact of AI on health outcomes will be shaped not only by the sophistication of the technological tools themselves but also by external “human factors.” Broadly speaking, human factors could blunt the positive impacts of AI tools in health care—or even introduce unintended, negative consequences—in two ways:

First, if developers train AI tools with data that don’t sufficiently mirror the diversity of the populations in which they will be deployed, even tools that are effective in the aggregate could create disparate outcomes. For example, if the datasets used to train AI have gaps, they can cause AI to provide lower-quality responses for some users and situations. This might lead the tool to systematically provide less accurate recommendations for some groups of users, or to experience “catastrophic failures” more frequently for some groups, such as failing to identify symptoms in time for effective treatment or even recommending courses of treatment that could result in harm.

Second, patterns of AI use may systematically differ across groups. Many potential users may initially be skeptical of trusting AI for consequential decisions that affect their health. Attitudes may differ within the population based on attributes such as age and familiarity with technology, which could affect who uses AI tools, who understands and interprets the AI’s output, and who adheres to treatment recommendations. Further, people’s impressions of AI health care tools will be shaped over time by their own experiences and by what they learn from others.

In recent research, we used simulation modeling to study a large range of hypothetical populations of users and AI health care tool specifications. We found that social conditions, such as initial attitudes toward AI tools within a population and how people change their attitudes over time, can potentially:

Lead to a modestly accurate AI tool having a negative impact on population health. This can occur because people’s experiences with an AI tool may be filtered through their expectations and then shared with others. For example, if an AI tool’s capabilities are objectively positive—in expectation, the AI won’t give recommendations that are harmful or completely ineffective—but sufficiently lower than expectations, users who are disappointed will lose trust in the tool. This could make them less likely to seek future treatment or adhere to recommendations if they do and lead them to pass along negative perceptions of the tool to friends, family, and others with whom they interact.

Create health disparities even after the introduction of a high-performing and unbiased AI tool (i.e., that performs equally well for all users). Specifically, when there are initial differences between groups within the population in their trust of AI-based health care—for example because of one group’s systematically negative previous experiences with health care or due to the AI tool being poorly communicated to one group—differential use patterns alone can translate into meaningful differences in health patterns across groups. These use patterns can also exacerbate differential effects on health across groups when AI training deficiencies cause a tool to provide better quality recommendations for some users than others.
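The two mechanisms above (experiences filtered through expectations, and unequal starting trust) can be illustrated with a deliberately simple, deterministic toy model. This is not the simulation model used in the research. Every function name and update rule here is a hypothetical assumption chosen for illustration: trust is treated as the share of a group that uses the tool, and trust moves in proportion to how far tool quality falls short of, or exceeds, expectations.

```python
def simulate_trust(initial_trust, tool_quality, expectation, learning_rate=0.5, steps=20):
    """Toy model of one group's trust in a health care AI tool (hypothetical).

    Assumptions: the fraction of the group using the tool equals current trust;
    users compare the tool's quality with what they expected, and the group's
    trust shifts by learning_rate * usage * (quality - expectation), kept in [0, 1].
    Returns the trust value at every step, starting with initial_trust.
    """
    trust = initial_trust
    history = [trust]
    for _ in range(steps):
        usage = trust
        surprise = tool_quality - expectation
        trust = min(1.0, max(0.0, trust + learning_rate * usage * surprise))
        history.append(trust)
    return history

def cumulative_benefit(history, tool_quality):
    """Benefit accrues only to users: usage (trust) times tool quality, summed over steps."""
    return sum(trust * tool_quality for trust in history[1:])

# An objectively useful tool (quality 0.6) that falls short of high expectations (0.8):
disappointed = simulate_trust(initial_trust=0.8, tool_quality=0.6, expectation=0.8)
print(round(disappointed[-1], 3))  # trust erodes well below its starting value

# The same unbiased tool meets expectations, but two groups start with different trust:
group_a = simulate_trust(initial_trust=0.8, tool_quality=0.7, expectation=0.7)
group_b = simulate_trust(initial_trust=0.4, tool_quality=0.7, expectation=0.7)
print(cumulative_benefit(group_a, 0.7) > cumulative_benefit(group_b, 0.7))  # True
```

In the second comparison the tool treats both groups identically; the gap in benefit comes entirely from the difference in starting trust, which mirrors the point about disparities arising from use patterns alone.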

Barriers to positive health impacts associated with systematic and shifting use patterns are largely beyond individual developers’ direct control but can be overcome with strategically designed policies and practices.

What could a regulatory framework for AI in health care look like?

Disregarding how human factors intersect with AI-powered health care tools can create outcomes that are costly in terms of life, health, and resources. There is also the potential that without careful oversight and forethought, AI tools can maintain or exacerbate existing health disparities or even introduce new ones. Guarding against negative consequences will require specific policies and ongoing, coordinated action that goes beyond the usual scope of individual product development. Based on our research, we suggest that any regulatory framework for AI in health care should accomplish three aims:

Ensure that AI tools are rigorously tested before they are made fully available to the public and are subject to regular scrutiny afterward. Those developing AI tools for use in health care should carefully consider whether the training data are matched to the tasks that the tools will perform and representative of the full population of eventual users. Characteristics of users to consider include (but are certainly not limited to) age, gender, culture, ethnicity, socioeconomic status, education, and language fluency. Policies should encourage and support developers in investing time and resources into pre- and post-launch assessments, including:

pilot tests to assess performance across a wide variety of groups that might experience disparate impact before large-scale application

monitoring whether and to what extent disparate use patterns and outcomes are observed after release

identifying appropriate corrective action if issues are found.

Require that users be clearly informed about what tools can do and what they cannot. Neither health care workers nor patients are likely to have extensive training or sophisticated understanding of the technical underpinnings of AI tools. It will be essential that plain-language use instructions, cautionary warnings, or other features designed to inform appropriate application boundaries are built into tools. Without these features, users’ expectations of AI capabilities might be inaccurate, with negative effects on health outcomes. For example, a recent report outlines how overreliance on AI tools by inexperienced mushroom foragers has led to cases of poisoning; it is easy to imagine how this might be a harbinger of patients misdiagnosing themselves with health care tools that are made publicly available and missing critical treatment or advocating for treatment that is contraindicated. Similarly, tools used by health care professionals should be supported by rigorous use protocols. Although advanced tools will likely provide accurate guidance an overwhelming majority of the time, they can also experience catastrophic failures (such as those referred to as “hallucinations” in the AI field), so it is critical for trained human users to be in the loop when making key decisions.

Proactively protect against medical misinformation. False or misleading claims about health and health care, whether the result of ignorance or malicious intent, have proliferated in digital spaces and become harder for the average person to distinguish from reliable information. This type of misinformation about health care AI tools presents a serious threat, potentially leading to mistrust or misapplication of these tools. To discourage misinformation, guardrails should be put in place to ensure consistent transparency about what data are used and about how the accuracy of training data is continuously verified.
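A pilot test that assesses performance across a wide variety of groups, as the first aim above recommends, usually starts by breaking a performance metric down by group instead of reporting only the overall figure. The sketch below computes accuracy per group and flags any group that falls more than a chosen margin below overall accuracy. The margin, the group labels, and the data are all hypothetical. In practice, clinically relevant metrics such as sensitivity would matter at least as much as accuracy.

```python
from collections import defaultdict

def accuracy_by_group(y_true, y_pred, groups):
    """Return (overall accuracy, {group: accuracy})."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for truth, pred, group in zip(y_true, y_pred, groups):
        total[group] += 1
        correct[group] += int(truth == pred)
    overall = sum(correct.values()) / sum(total.values())
    return overall, {g: correct[g] / total[g] for g in total}

def flag_groups(per_group, overall, margin=0.1):
    """Groups whose accuracy falls more than `margin` below the overall accuracy."""
    return sorted(g for g, acc in per_group.items() if acc < overall - margin)

# Hypothetical pilot results: 1 = condition present, 0 = absent.
y_true = [1, 0, 1, 1, 0, 1, 0, 1, 1, 0, 1, 0]
y_pred = [1, 0, 1, 1, 0, 1, 0, 0, 0, 0, 1, 1]
groups = ["urban"] * 6 + ["rural"] * 6

overall, per_group = accuracy_by_group(y_true, y_pred, groups)
print(overall, per_group)  # 0.75 {'urban': 1.0, 'rural': 0.5}
print(flag_groups(per_group, overall))  # ['rural']
```

Here a respectable 75% overall accuracy hides a group for which the tool is right only half the time. This is the kind of gap that aggregate reporting can conceal.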

How can regulation of AI in health care keep pace with rapidly changing conditions?

In addition to developers of tools themselves, there are important opportunities for unaffiliated researchers to study the impact of AI health care tools as they are introduced and recommend adjustments to any regulatory framework. Two examples of what this work might contribute are:

Social scientists can learn more about how people think about and engage with AI tools, as well as how perceptions and behaviors change over time. Rigorous data collection and qualitative and quantitative analyses can shed light on these questions, improving understanding of how individuals, communities, and society adapt to shifts in the health care landscape.

Systems scientists can consider the co-evolution of AI tools and human behavior over time. Building on or tangential to recent research, systems science can be used to explore the complex interactions that determine how multiple health care AI tools deployed across diverse settings might affect long-term health trends. Using longitudinal data collected as AI tools come into widespread use, prospective simulation models can provide timely guidance on how policies might need to be course corrected.


The Future of AI: What’s Next in Machine Learning and Deep Learning?

Artificial Intelligence (AI) has evolved rapidly over the past decade, transforming industries and redefining the way businesses operate. With machine learning and deep learning at the core of these advances, the coming years promise further innovation, unlocking new opportunities in industries ranging from healthcare and finance to cybersecurity and automation. In this blog, we explore the upcoming trends and what lies ahead for machine learning and deep learning.

1. Advancements in Explainable AI (XAI)

As AI models become more complex, understanding their decision-making process remains a challenge. Explainable AI (XAI) aims to make machine learning and deep learning models more transparent and interpretable. Businesses and regulators are pushing for AI systems that provide clear justifications for their outputs, ensuring ethical AI adoption across industries. The growing demand for fairness and accountability in AI-driven decisions is accelerating research into interpretable AI, helping users trust and effectively utilize AI-powered tools.
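Permutation feature importance is one widely used, model-agnostic XAI technique. It shuffles one input feature at a time and measures how much the model's error grows; a large increase suggests the model relies on that feature. The sketch below implements the idea from scratch with a hypothetical stand-in model. scikit-learn ships its own implementation, `sklearn.inspection.permutation_importance`, which also averages over several shuffles.

```python
import random

def mse(model, X, y):
    """Mean squared error of model predictions over rows of X."""
    return sum((model(row) - target) ** 2 for row, target in zip(X, y)) / len(y)

def permutation_importance(model, X, y, seed=0):
    """Increase in MSE when each feature column is shuffled (one shuffle per feature)."""
    rng = random.Random(seed)
    baseline = mse(model, X, y)
    importances = []
    for j in range(len(X[0])):
        column = [row[j] for row in X]
        rng.shuffle(column)
        # Rebuild rows with only column j replaced by its shuffled values.
        X_perm = [row[:j] + [value] + row[j + 1:] for row, value in zip(X, column)]
        importances.append(mse(model, X_perm, y) - baseline)
    return importances

def model(row):
    """Hypothetical stand-in model: uses feature 0 and ignores feature 1."""
    return 2.0 * row[0]

X = [[float(i), float(i % 7)] for i in range(50)]
y = [2.0 * row[0] for row in X]

print(permutation_importance(model, X, y))
```

Feature 1 gets an importance of exactly zero because the model never reads it, while shuffling feature 0 sharply increases the error. That is the kind of justification an explainable system can surface to users and regulators.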

2. AI-Powered Automation in IT and Business Processes

AI-driven automation is set to revolutionize business operations by minimizing human intervention. Machine learning and deep learning algorithms can predict and automate tasks in various sectors, from IT infrastructure management to customer service and finance. This shift will increase efficiency, reduce costs, and improve decision-making. Businesses that adopt AI-powered automation will gain a competitive advantage by streamlining workflows and enhancing productivity through machine learning and deep learning capabilities.

3. Neural Network Enhancements and Next-Gen Deep Learning Models

Deep learning models are becoming more sophisticated, with innovations like transformer models (e.g., GPT-4, BERT) pushing the boundaries of natural language processing (NLP). The next wave of machine learning and deep learning will focus on improving efficiency, reducing computation costs, and enhancing real-time AI applications. Advancements in neural networks will also lead to better image and speech recognition systems, making AI more accessible and functional in everyday life.

4. AI in Edge Computing for Faster and Smarter Processing

With the rise of IoT and real-time processing needs, AI is shifting toward edge computing. This allows machine learning and deep learning models to process data locally, reducing latency and dependency on cloud services. Industries like healthcare, autonomous vehicles, and smart cities will greatly benefit from edge AI integration. The fusion of edge computing with machine learning and deep learning will enable faster decision-making and improved efficiency in critical applications like medical diagnostics and predictive maintenance.

5. Ethical AI and Bias Mitigation

AI systems are prone to biases due to data limitations and model training inefficiencies. The future of machine learning and deep learning will prioritize ethical AI frameworks to mitigate bias and ensure fairness. Companies and researchers are working towards AI models that are more inclusive and free from discriminatory outputs. Ethical AI development will involve strategies like diverse dataset curation, bias auditing, and transparent AI decision-making processes to build trust in AI-powered systems.

6. Quantum AI: The Next Frontier

Quantum computing could transform AI by enabling new, more powerful kinds of computation. Researchers hope quantum AI will significantly accelerate certain machine learning and deep learning workloads, particularly complex optimization problems and large-scale simulations that strain classical computers. As quantum AI matures, it may open new doors for solving problems previously considered intractable because of computational constraints.

7. AI-Generated Content and Creative Applications

From AI-generated art and music to automated content creation, AI is making strides in the creative industry. Generative AI models like DALL-E and ChatGPT are paving the way for more sophisticated and human-like AI creativity. The future of machine learning and deep learning will push the boundaries of AI-driven content creation, enabling businesses to leverage AI for personalized marketing, video editing, and even storytelling.

8. AI in Cybersecurity: Real-Time Threat Detection

As cyber threats evolve, AI-powered cybersecurity solutions are becoming essential. Machine learning and deep learning models can analyze and predict security vulnerabilities, detecting threats in real time. The future of AI in cybersecurity lies in its ability to autonomously defend against sophisticated cyberattacks. AI-powered security systems will continuously learn from emerging threats, adapting and strengthening defense mechanisms to ensure data privacy and protection.
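Production threat-detection systems are far more elaborate, but their basic idea can be sketched with a rolling z-score over event counts: learn what "normal" looks like, then flag deviations. The window size, threshold, and failed-login counts below are hypothetical.

```python
from statistics import mean, stdev

def flag_anomalies(counts, window=5, threshold=3.0):
    """Return indices whose count lies more than `threshold` standard deviations
    above the mean of the preceding `window` observations."""
    anomalies = []
    for i in range(window, len(counts)):
        history = counts[i - window:i]
        mu, sigma = mean(history), stdev(history)
        if sigma > 0 and (counts[i] - mu) / sigma > threshold:
            anomalies.append(i)
    return anomalies

# Hypothetical failed-login counts per minute; minute 8 shows a burst.
failed_logins = [3, 4, 2, 5, 3, 4, 3, 4, 40, 5]
print(flag_anomalies(failed_logins))  # [8]
```

A learning system would go further, for example by updating its baseline continuously and excluding confirmed attacks from it. In this simple version the burst inflates the next window's statistics.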

9. The Role of AI in Personalized Healthcare

One of the most impactful applications of machine learning and deep learning is in healthcare. AI-driven diagnostics, predictive analytics, and drug discovery are transforming patient care. AI models can analyze medical images, detect anomalies, and provide early disease detection, improving treatment outcomes. The integration of machine learning and deep learning in healthcare will enable personalized treatment plans and faster drug development, ultimately saving lives.

10. AI and the Future of Autonomous Systems

From self-driving cars to intelligent robotics, machine learning and deep learning are at the forefront of autonomous technology. The evolution of AI-powered autonomous systems will improve safety, efficiency, and decision-making capabilities. As AI continues to advance, we can expect self-learning robots, smarter logistics systems, and fully automated industrial processes that enhance productivity across various domains.

Conclusion

The future of AI, machine learning and deep learning is brimming with possibilities. From enhancing automation to enabling ethical and explainable AI, the next phase of AI development will drive unprecedented innovation. Businesses and tech leaders must stay ahead of these trends to leverage AI's full potential. With continued advancements in machine learning and deep learning, AI will become more intelligent, efficient, and accessible, shaping the digital world like never before.

Are you ready for the AI-driven future? Stay updated with the latest AI trends and explore how these advancements can shape your business!


AI Frameworks Help Data Scientists For GenAI Survival

AI Frameworks: Crucial to the Success of GenAI

Develop Your AI Capabilities Now

You play a crucial part in the quickly growing field of generative artificial intelligence (GenAI) as a data scientist. Your proficiency in data analysis, modeling, and interpretation is still essential, even though platforms like Hugging Face and LangChain are at the forefront of AI research.

Although GenAI systems are capable of producing remarkable outcomes, they still depend heavily on clean, well-organized data and insightful interpretation, areas in which data scientists are highly skilled. By applying your in-depth knowledge of data and statistical techniques, you can steer GenAI models toward more precise and useful predictions. Your role as a data scientist is crucial in ensuring that GenAI systems rest on strong, data-driven foundations and can realize their full potential. Here’s how to take the lead:

Data Quality Is Crucial

The effectiveness of even the most sophisticated GenAI models depends on the quality of the data they use. Libraries such as pandas and Modin let you clean, preprocess, and manipulate large datasets so that the data feeding your models is relevant and reliable.
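The sketch below shows these cleaning steps with pandas on a small hypothetical table: normalizing inconsistent text, coercing a numeric column, removing duplicates, and filling missing values. Modin documents itself as a drop-in replacement (`import modin.pandas as pd`), so the same calls should carry over to larger datasets. It is still worth verifying that for your Modin version and execution engine.

```python
import pandas as pd  # with Modin installed, `import modin.pandas as pd` is the documented swap

raw = pd.DataFrame({
    "city": ["Boston", "boston ", "Chicago", "Chicago", None],
    "age": ["34", "34", "n/a", "51", "29"],
})

clean = raw.copy()
# Normalize inconsistent text: trim whitespace and use consistent capitalization.
clean["city"] = clean["city"].str.strip().str.title()
# Coerce ages to numbers; unparseable values such as "n/a" become NaN.
clean["age"] = pd.to_numeric(clean["age"], errors="coerce")
# Drop rows that are exact duplicates after normalization.
clean = clean.drop_duplicates()
# Fill missing values: median for numbers, a placeholder label for text.
clean["age"] = clean["age"].fillna(clean["age"].median())
clean["city"] = clean["city"].fillna("Unknown")
print(clean)
```

Normalizing before de-duplicating matters here: "Boston" and "boston " only become recognizable duplicates after the text is cleaned.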

Exploratory Data Analysis and Interpretation

It is essential to understand the features and trends in the data before building models. Visualization libraries such as Matplotlib and Seaborn help developers plot data and model outputs, which aids in understanding the data, selecting features, and interpreting models.

Model Optimization and Evaluation

A variety of algorithms for model construction are offered by AI frameworks like scikit-learn, PyTorch, and TensorFlow. To improve models and their performance, they provide a range of techniques for cross-validation, hyperparameter optimization, and performance evaluation.
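The example below is a minimal sketch of cross-validation and hyperparameter optimization with scikit-learn. It tunes the regularization strength `C` of a logistic regression on the bundled Iris dataset using 5-fold cross-validation, then checks the chosen model on a held-out test split. The model, grid, and dataset are illustrative choices, not recommendations.

```python
from sklearn.datasets import load_iris
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import GridSearchCV, train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

X, y = load_iris(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=0, stratify=y
)

# Scaling inside the pipeline means each CV fold is scaled using only its own training split.
pipeline = make_pipeline(StandardScaler(), LogisticRegression(max_iter=1000))
search = GridSearchCV(pipeline, {"logisticregression__C": [0.01, 0.1, 1, 10]}, cv=5)
search.fit(X_train, y_train)

print(search.best_params_)
print(f"mean CV accuracy: {search.best_score_:.3f}")
print(f"held-out test accuracy: {search.score(X_test, y_test):.3f}")
```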

Model Deployment and Integration

Tools such as ONNX Runtime and MLflow help with cross-platform deployment and experiment tracking. They help developers keep models performing well in production and manage their projects from start to finish.

Intel’s Optimized AI Frameworks and Tools

Developers can keep using the data analytics, machine learning, and deep learning technologies they already know, such as Modin, NumPy, scikit-learn, and PyTorch. Intel has optimized these AI tools and frameworks for each phase of the AI workflow, including data preparation, model training, inference, and deployment, building on oneAPI, an open, multiarchitecture, multivendor programming model.

Data Engineering and Model Development

To speed up end-to-end data science pipelines on Intel architecture, use Intel’s AI Tools, which include Python tools and frameworks such as Modin, Intel Optimization for TensorFlow, Intel Optimization for PyTorch, Intel Extension for Scikit-learn, and XGBoost.

Optimization and Deployment

For CPU or GPU deployment, Intel Neural Compressor speeds up deep learning inference and reduces model size. The OpenVINO toolkit optimizes models and deploys them across multiple hardware platforms, including Intel CPUs.
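Tools like Intel Neural Compressor implement far more sophisticated schemes. Still, the core idea of shrinking a model by quantization can be shown with symmetric 8-bit quantization of a list of weights: each float is stored as a small integer plus one shared scale factor, roughly a fourfold reduction compared with 32-bit floats. This is a generic illustration, not Neural Compressor's API or algorithm, and the weights are hypothetical.

```python
def quantize_int8(weights):
    """Symmetric per-tensor quantization: map floats to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale

def dequantize(q, scale):
    """Recover approximate float weights from integers and the shared scale."""
    return [v * scale for v in q]

weights = [0.42, -1.27, 0.003, 0.9, -0.5]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)

print(q)  # [42, -127, 0, 90, -50]
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print(max_error <= scale / 2)  # rounding error is at most half a quantization step
```

The small weight 0.003 rounds to zero. Production tools mitigate accuracy loss of this kind with calibration data and per-channel scales.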

These AI tools can help you improve performance on your Intel hardware platforms.

Library of Resources

Discover a collection of high-quality, professionally created, and carefully selected resources centered on the core data science competencies that developers need, including explorations of machine learning and deep learning AI frameworks.

What you will discover:

Use Modin to expedite the extract, transform, and load (ETL) process for enormous DataFrames and analyze massive datasets.

To improve speed on Intel hardware, use Intel’s optimized AI frameworks (such as Intel Optimization for XGBoost, Intel Extension for Scikit-learn, Intel Optimization for PyTorch, and Intel Optimization for TensorFlow).

Use Intel-optimized software on the most recent Intel platforms to implement and deploy AI workloads on Intel Tiber AI Cloud.

How to Begin

Frameworks for Data Engineering and Machine Learning

Step 1: View the Modin, Intel Extension for Scikit-learn, and Intel Optimization for XGBoost videos and read the introductory papers.

Modin: The video explains when to use Modin and how to combine Modin and pandas judiciously for a faster overall turnaround. A quick start guide for Modin is also available for more in-depth information.

Intel Extension for Scikit-learn: This tutorial gives you an overview of the extension, walks you through the code step by step, and explains how using it can improve performance. A video on accelerating silhouette scoring, PCA, and K-means clustering is also available.

Intel Optimization for XGBoost: This straightforward tutorial explains Intel Optimization for XGBoost and how to use Intel optimizations to enhance training and inference performance.
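According to the extension's documentation, enabling Intel Extension for Scikit-learn typically takes two lines, `from sklearnex import patch_sklearn` and `patch_sklearn()`, run before scikit-learn estimators are imported. The sketch below wraps that in a fallback so it also runs where the extension is not installed. It then fits K-means, one of the algorithms the tutorial mentions, on synthetic data. Whether a given estimator and parameter combination is actually accelerated depends on the extension version.

```python
try:
    # Must run before importing scikit-learn estimators so the patched versions are used.
    from sklearnex import patch_sklearn
    patch_sklearn()
    ACCELERATED = True
except ImportError:
    ACCELERATED = False  # fall back to stock scikit-learn

from sklearn.cluster import KMeans
from sklearn.datasets import make_blobs
from sklearn.metrics import silhouette_score

X, _ = make_blobs(n_samples=1000, centers=3, random_state=42)
kmeans = KMeans(n_clusters=3, n_init=10, random_state=42).fit(X)

print("Intel extension active:", ACCELERATED)
print("silhouette score:", round(silhouette_score(X, kmeans.labels_), 3))
```

The estimator code stays identical either way, which is the main appeal of the patching approach.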

Step 2: Use Intel Tiber AI Cloud to create and develop machine learning workloads.

On Intel Tiber AI Cloud, this tutorial runs machine learning workloads with Modin, scikit-learn, and XGBoost.

Step 3: Use Modin and scikit-learn to create an end-to-end machine learning process using census data.

Run an end-to-end machine learning task on 1970–2010 US census data with this code sample. It uses the Intel Distribution of Modin for exploratory data analysis and the Intel Extension for Scikit-learn for ridge regression.
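The census code sample itself is not reproduced here. The ridge regression step can still be sketched with scikit-learn, using synthetic data in place of the census features. The coefficients, noise level, and `alpha` value are hypothetical.

```python
import numpy as np
from sklearn.linear_model import Ridge
from sklearn.metrics import r2_score
from sklearn.model_selection import train_test_split

rng = np.random.default_rng(0)
X = rng.normal(size=(500, 3))
# Synthetic target: a known linear relationship plus a little noise.
y = 3.0 * X[:, 0] - 2.0 * X[:, 1] + 0.5 * X[:, 2] + rng.normal(scale=0.1, size=500)

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=0)
# alpha sets the strength of the L2 penalty that shrinks coefficients toward zero.
model = Ridge(alpha=1.0).fit(X_train, y_train)

print("coefficients:", np.round(model.coef_, 2))
print("test R^2:", round(r2_score(y_test, model.predict(X_test)), 3))
```

Because the penalty is small relative to the amount of data, the recovered coefficients land close to the true values of 3, -2, and 0.5.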

Deep Learning Frameworks

Step 4: Begin by watching the videos and reading the introduction papers for Intel’s PyTorch and TensorFlow optimizations.

Intel PyTorch Optimizations: Read the article to learn how to use the Intel Extension for PyTorch to accelerate your workloads for inference and training. Additionally, a brief video demonstrates how to use the extension to run PyTorch inference on an Intel Data Center GPU Flex Series.

Intel’s TensorFlow Optimizations: The article and video provide an overview of the Intel Extension for TensorFlow and demonstrate how to utilize it to accelerate your AI tasks.

Step 5: Use TensorFlow and PyTorch for AI on the Intel Tiber AI Cloud.

This article shows how to use PyTorch and TensorFlow on Intel Tiber AI Cloud to create and run complex AI workloads.

Step 6: Speed up LSTM text creation with Intel Extension for TensorFlow.

The Intel Extension for TensorFlow can speed up LSTM model training for text generation.

Step 7: Use PyTorch and DialoGPT to create an interactive chat-generation model.

Discover how to use Hugging Face’s pretrained DialoGPT model to create an interactive chat model and how to use the Intel Extension for PyTorch to dynamically quantize the model.

Read more on Govindhtech.com


The Skills I Acquired on My Path to Becoming a Data Scientist

Data science has emerged as one of the most sought-after fields in recent years, and my journey into this exciting discipline has been nothing short of transformative. As someone with a deep curiosity for extracting insights from data, I was naturally drawn to the world of data science. In this blog post, I will share the skills I acquired on my path to becoming a data scientist, highlighting the importance of a diverse skill set in this field.

The Foundation — Mathematics and Statistics

At the core of data science lies a strong foundation in mathematics and statistics. Concepts such as probability, linear algebra, and statistical inference form the building blocks of data analysis and modeling. Understanding these principles is crucial for making informed decisions and drawing meaningful conclusions from data. Throughout my learning journey, I immersed myself in these mathematical concepts, applying them to real-world problems and honing my analytical skills.

Programming Proficiency

Proficiency in programming languages like Python or R is indispensable for a data scientist. These languages provide the tools and frameworks necessary for data manipulation, analysis, and modeling. I embarked on a journey to learn these languages, starting with the basics and gradually advancing to more complex concepts. Writing efficient and elegant code became second nature to me, enabling me to tackle large datasets and build sophisticated models.

Data Handling and Preprocessing

Real-world data is often messy, so working with it requires careful handling and preprocessing. This involves techniques such as data cleaning, transformation, and feature engineering. I gained valuable experience in navigating the intricacies of data preprocessing, learning how to deal with missing values, outliers, and inconsistent data formats. These skills allowed me to extract valuable insights from raw data and lay the groundwork for subsequent analysis.
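The following sketch works through the three cleaning problems above using only Python's standard library. The records are hypothetical. The code makes several assumptions: slash-separated dates are day-first, outliers are flagged with the common 1.5 × IQR rule, and missing amounts are filled with the median of the non-outlier values.

```python
import statistics
from datetime import datetime

# Hypothetical raw records: one missing amount, mixed date formats, one outlier.
raw = [
    {"date": "2024-01-05", "amount": "120.5"},
    {"date": "06/01/2024", "amount": ""},
    {"date": "2024-01-07", "amount": "98.0"},
    {"date": "08/01/2024", "amount": "105.25"},
    {"date": "2024-01-09", "amount": "110.0"},
    {"date": "2024-01-10", "amount": "101.5"},
    {"date": "11/01/2024", "amount": "9999"},
]

def parse_date(text):
    """Try each known format in turn; treating d/m/Y as day-first is an assumption."""
    for fmt in ("%Y-%m-%d", "%d/%m/%Y"):
        try:
            return datetime.strptime(text, fmt).date()
        except ValueError:
            continue
    raise ValueError(f"unrecognized date: {text!r}")

amounts = [float(r["amount"]) for r in raw if r["amount"]]

# Flag outliers with the 1.5 * IQR rule (inclusive quartiles).
q1, _, q3 = statistics.quantiles(amounts, n=4, method="inclusive")
iqr = q3 - q1
low, high = q1 - 1.5 * iqr, q3 + 1.5 * iqr

# Impute missing amounts with the median of the non-outlier values.
median = statistics.median([a for a in amounts if low <= a <= high])

clean = []
for r in raw:
    amount = float(r["amount"]) if r["amount"] else median
    clean.append({
        "date": parse_date(r["date"]),
        "amount": amount,
        "outlier": not (low <= amount <= high),
    })

for row in clean:
    print(row)
```

Flagging the outlier instead of silently deleting it keeps the decision visible. Whether 9999 is a typo or a genuine large order is a domain question, not a statistical one.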

Data Visualization and Communication

Data visualization plays a pivotal role in conveying insights to stakeholders and decision-makers. I realized the power of effective visualizations in telling compelling stories and making complex information accessible. I explored various tools and libraries, such as Matplotlib and Tableau, to create visually appealing and informative visualizations. Sharing these visualizations with others enhanced my ability to communicate data-driven insights effectively.

Machine Learning and Predictive Modeling

Machine learning is a cornerstone of data science, enabling us to build predictive models and make data-driven predictions. I delved into the realm of supervised and unsupervised learning, exploring algorithms such as linear regression, decision trees, and clustering techniques. Through hands-on projects, I gained practical experience in building models, fine-tuning their parameters, and evaluating their performance.
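Linear regression, the first algorithm named above, can be fitted by hand to show what a library does under the hood. This sketch uses ordinary least squares for a single feature and scores the fit with R². The spend-versus-sales numbers are hypothetical. In practice, a library such as scikit-learn would handle multiple features and validation splits.

```python
def fit_simple_linear_regression(xs, ys):
    """Ordinary least squares for y = intercept + slope * x (one feature)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return intercept, slope

def r_squared(xs, ys, intercept, slope):
    """Share of the variance in ys explained by the fitted line."""
    mean_y = sum(ys) / len(ys)
    ss_res = sum((y - (intercept + slope * x)) ** 2 for x, y in zip(xs, ys))
    ss_tot = sum((y - mean_y) ** 2 for y in ys)
    return 1 - ss_res / ss_tot

# Hypothetical data: advertising spend (x) vs. sales (y).
xs = [1.0, 2.0, 3.0, 4.0, 5.0]
ys = [2.1, 3.9, 6.2, 7.8, 10.1]

intercept, slope = fit_simple_linear_regression(xs, ys)
r2 = r_squared(xs, ys, intercept, slope)
print(f"y = {intercept:.2f} + {slope:.2f}x, R^2 = {r2:.3f}")
```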

Database Management and SQL

Data science often involves working with large datasets stored in databases. Understanding database management and SQL (Structured Query Language) is essential for extracting valuable information from these repositories. I embarked on a journey to learn SQL, mastering the art of querying databases, joining tables, and aggregating data. These skills allowed me to harness the power of databases and efficiently retrieve the data required for analysis.
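Here is a query that joins two tables and aggregates the result, using Python's built-in `sqlite3` module and an in-memory database. The `customers` and `orders` tables and their rows are hypothetical.

```python
import sqlite3

# In-memory database with two hypothetical tables.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT, region TEXT);
    CREATE TABLE orders (
        id INTEGER PRIMARY KEY,
        customer_id INTEGER REFERENCES customers(id),
        amount REAL
    );
    INSERT INTO customers VALUES (1, 'Asha', 'North'), (2, 'Ben', 'South'), (3, 'Chen', 'North');
    INSERT INTO orders (customer_id, amount) VALUES (1, 120.0), (1, 80.0), (2, 50.0), (3, 200.0);
""")

# Join orders to customers, then aggregate order count and revenue per region.
rows = conn.execute("""
    SELECT c.region, COUNT(o.id) AS n_orders, SUM(o.amount) AS revenue
    FROM orders AS o
    JOIN customers AS c ON c.id = o.customer_id
    GROUP BY c.region
    ORDER BY revenue DESC
""").fetchall()
conn.close()

for region, n_orders, revenue in rows:
    print(region, n_orders, revenue)
```

The same `JOIN ... GROUP BY` pattern carries over to server databases such as PostgreSQL or MySQL. Only the connection code changes.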

Domain Knowledge and Specialization

While technical skills are crucial, domain knowledge adds a unique dimension to data science projects. By specializing in specific industries or domains, data scientists can better understand the context and nuances of the problems they are solving. I explored various domains, such as healthcare, finance, and marketing, and acquired specialized knowledge in each. This expertise complemented my technical skills, enabling me to provide insights that were not only data-driven but also tailored to the specific industry.

Soft Skills — Communication and Problem-Solving

In addition to technical skills, soft skills play a vital role in the success of a data scientist. Effective communication allows us to articulate complex ideas and findings to non-technical stakeholders, bridging the gap between data science and business. Problem-solving skills help us navigate challenges and find innovative solutions in a rapidly evolving field. Throughout my journey, I honed these skills, collaborating with teams, presenting findings, and adapting my approach to different audiences.

Continuous Learning and Adaptation

Data science is a field that is constantly evolving, with new tools, technologies, and trends emerging regularly. To stay at the forefront of this ever-changing landscape, continuous learning is essential. I dedicated myself to staying updated by following industry blogs, attending conferences, and participating in courses. This commitment to lifelong learning allowed me to adapt to new challenges, acquire new skills, and remain competitive in the field.

In conclusion, the journey to becoming a data scientist is an exciting and dynamic one, requiring a diverse set of skills. From mathematics and programming to data handling and communication, each skill plays a crucial role in unlocking the potential of data. Aspiring data scientists should embrace this multidimensional nature of the field and embark on their own learning journey. If you want to learn more about data science, I highly recommend that you contact ACTE Technologies because they offer data science courses and job placement opportunities. Experienced teachers can help you learn better. You can find these services both online and offline. Take things step by step and consider enrolling in a course if you’re interested. By acquiring these skills and continuously adapting to new developments, you can make a meaningful impact in the world of data science.

#data science#data visualization#education#information#technology#machine learning#database#sql#predictive analytics#r programming#python#big data#statistics


How AI is Revolutionizing Digital Marketing Tools in 2024

Want to know how AI is revolutionizing digital marketing tools in 2024? Look no further. In this blog, I outline the key ways AI is reshaping digital marketing tools this year.

Introduction

The digital marketing landscape is evolving at a breakneck pace, and artificial intelligence (AI) is at the forefront of this transformation. As we step into 2024, AI-powered tools are revolutionizing how businesses approach digital marketing, offering unprecedented levels of efficiency, personalization, and insight. In this article, we'll explore how AI is reshaping digital marketing tools and why incorporating these advanced technologies is essential for staying competitive.

The Rise of AI in Digital Marketing Tools in 2024

AI has become an integral part of digital marketing strategies, with 80% of industry experts incorporating some form of AI technology in their marketing activities by the end of 2023. This trend is only expected to grow as AI tools become more sophisticated and accessible.

Here are the top must-have digital marketing tools in 2024.

Stats & Facts:

Adoption Rate: By 2023, 80% of industry experts were using AI technology in their marketing activities (Source: Forbes).

Market Growth: The global market for AI in marketing is expected to grow from $12 billion in 2022 to $35 billion by 2025 (Source: MarketsandMarkets).

Enhancing Personalization

One of the most significant impacts of AI on digital marketing is its ability to deliver highly personalized experiences. AI algorithms analyze vast amounts of data to understand consumer behavior, preferences, and trends. This allows marketers to create tailored content, recommendations, and offers for individual users.

Example: Companies like Amazon and Netflix leverage AI to provide personalized product recommendations and content suggestions, resulting in higher engagement and conversion rates. According to a study by McKinsey, companies that excel at personalization generate 40% more revenue from those activities than average players.
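To make "personalized recommendations" concrete, here is a toy user-based collaborative filter. It scores the items a user hasn't rated by weighting other users' ratings by cosine similarity. This is a textbook technique chosen for illustration and is not how Amazon or Netflix actually build their systems. The users, items, and ratings are hypothetical, and treating "unrated" as 0 is a simplifying assumption.

```python
import math

# Toy user-item ratings; 0 means "not rated". All data is hypothetical.
RATINGS = {
    "alice": {"laptop": 5, "mouse": 4, "headphones": 0, "lamp": 0},
    "bob":   {"laptop": 4, "mouse": 5, "headphones": 5, "lamp": 1},
    "cara":  {"laptop": 1, "mouse": 0, "headphones": 1, "lamp": 5},
}

def cosine(u, v):
    """Cosine similarity between two users' rating dicts over the same items."""
    dot = sum(u[item] * v[item] for item in u)
    norm_u = math.sqrt(sum(r * r for r in u.values()))
    norm_v = math.sqrt(sum(r * r for r in v.values()))
    return dot / (norm_u * norm_v) if norm_u and norm_v else 0.0

def recommend(user, ratings=RATINGS):
    """Rank the user's unrated items by similarity-weighted ratings from others."""
    target = ratings[user]
    sims = {other: cosine(target, r) for other, r in ratings.items() if other != user}
    scores = {}
    for item, rating in target.items():
        if rating:  # skip items the user has already rated
            continue
        raters = [o for o in sims if ratings[o][item]]  # only users who rated it
        total = sum(sims[o] for o in raters)
        weighted = sum(sims[o] * ratings[o][item] for o in raters)
        scores[item] = weighted / total if total else 0.0
    return sorted(scores.items(), key=lambda kv: kv[1], reverse=True)

print(recommend("alice"))
```

Alice's ratings resemble Bob's far more than Cara's, so Bob's high rating for headphones dominates and headphones come out ahead of the lamp.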

Stats & Facts:

Revenue Increase: Companies that excel at personalization generate 40% more revenue from those activities than average players (Source: McKinsey).

Consumer Preference: 80% of consumers are more likely to make a purchase when brands offer personalized experiences (Source: Epsilon).

Improving Customer Insights

AI-powered analytics tools are transforming how businesses gather and interpret customer data. These tools can process and analyze large datasets in real-time, providing deep insights into customer behavior, sentiment, and preferences.

Example: Tools like Google Analytics 4 use AI to offer predictive metrics, such as purchase probability, churn probability, and predicted revenue. This helps businesses make informed decisions and refine their marketing strategies.

Stats & Facts:

Predictive Analytics: Companies that use predictive analytics are 2.9 times more likely to report revenue growth rates higher than the industry average

Data Processing: AI can analyze data up to 60 times faster than humans

Automating Routine Tasks

Automation is another area where AI is making a significant impact. AI-driven automation tools handle repetitive tasks, freeing up marketers to focus on more strategic activities.

Example: Email marketing platforms like Mailchimp use AI to automate email campaign scheduling, segmentation, and even content creation. This results in more efficient campaigns and improved ROI. In fact, automated email marketing can generate up to 320% more revenue than non-automated campaigns.

Stats & Facts:

Revenue Boost: Automated email marketing can generate up to 320% more revenue than non-automated campaigns

Time Savings: AI can reduce the time spent on routine tasks by up to 50%

Enhancing Customer Service with Chatbots

AI-powered chatbots are revolutionizing customer service by providing instant, 24/7 support. These chatbots can handle a wide range of queries, from product information to troubleshooting, without human intervention.

Example: Companies like Sephora use AI chatbots to assist customers with product recommendations and booking appointments. According to a report by Gartner, by 2024, AI-driven chatbots will handle 85% of customer interactions without human agents.

Stats & Facts:

Interaction Handling: By 2024, AI-driven chatbots will handle 85% of customer interactions without human agents

Cost Savings: Businesses can save up to 30% in customer support costs by using chatbots

Boosting Content Creation and Optimization

AI is also transforming content creation and optimization. AI tools can generate high-quality content, suggest improvements, and even predict how content will perform.

Aspiring to create top-notch content and become a leader in your industry?

Well, here's a price-drop alert for the high-quality content mastery course from Digital Scholar, which aims to help your brand go viral on social media and earn better Google rankings.

Example: Tools like Copy.ai and Writesonic use AI to create blog posts, social media content, and ad copy. Additionally, platforms like MarketMuse analyze content and provide optimization recommendations to improve search engine rankings. According to HubSpot, businesses that use AI for content marketing see a 50% increase in engagement.

Stats & Facts:

Engagement Increase: Businesses using AI for content marketing see a 50% increase in engagement

Content Generation: AI can generate content up to 10 times faster than humans

Enhancing Ad Targeting and Performance

AI-driven advertising platforms are changing the way businesses target and engage with their audiences. These tools use machine learning algorithms to analyze user data and optimize ad placements, ensuring that ads reach the right people at the right time.

Example: Facebook's AI-powered ad platform uses advanced algorithms to target users based on their behavior, interests, and demographics. This results in higher click-through rates (CTR) and lower cost-per-click (CPC). A study by WordStream found that AI-optimized ads can achieve up to 50% higher CTRs compared to non-optimized ads.
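Claims like "50% higher CTRs" are easier to judge with the arithmetic in hand. CTR is clicks divided by impressions, and CPC is spend divided by clicks. The campaign numbers below are hypothetical, not from WordStream. The example shows that a 50% relative CTR lift means going from 1% to 1.5%, not gaining 50 percentage points. It also shows that, at fixed spend, the extra clicks lower CPC.

```python
def ad_metrics(impressions, clicks, spend):
    """Return (click-through rate, cost per click) for one campaign."""
    ctr = clicks / impressions  # fraction of impressions that were clicked
    cpc = spend / clicks        # average cost of each click
    return ctr, cpc

# Hypothetical campaigns with identical budget and impressions.
baseline_ctr, baseline_cpc = ad_metrics(impressions=50_000, clicks=500, spend=400.0)
optimized_ctr, optimized_cpc = ad_metrics(impressions=50_000, clicks=750, spend=400.0)

ctr_lift = optimized_ctr / baseline_ctr - 1    # relative change, not percentage points
cpc_change = optimized_cpc / baseline_cpc - 1

print(f"CTR {baseline_ctr:.2%} -> {optimized_ctr:.2%} ({ctr_lift:+.0%})")
print(f"CPC ${baseline_cpc:.2f} -> ${optimized_cpc:.2f} ({cpc_change:+.0%})")
```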

Stats & Facts:

CTR Increase: AI-optimized ads can achieve up to 50% higher click-through rates

Cost Efficiency: AI-driven ad platforms can reduce cost-per-click by up to 30%

Predictive Analytics for Better Decision-Making

Predictive analytics powered by AI enables marketers to forecast trends, customer behavior, and campaign outcomes. This allows for proactive decision-making and more effective strategy development.

Example: Platforms like IBM Watson Marketing use AI to predict customer behavior and provide actionable insights. This helps businesses tailor their marketing efforts to meet future demands. According to a report by Forrester, companies that use predictive analytics are 2.9 times more likely to report revenue growth rates higher than the industry average.

Stats & Facts:

Revenue Growth: Companies using predictive analytics are 2.9 times more likely to report higher revenue growth rates

Accuracy Improvement: AI can improve the accuracy of marketing forecasts by up to 70%

Enhancing Social Media Management

AI tools are revolutionizing social media management by automating content scheduling, analyzing engagement metrics, and even generating content ideas.

Example: Tools like Hootsuite and Sprout Social use AI to analyze social media trends and suggest optimal posting times. They also provide sentiment analysis to help businesses understand how their audience feels about their brand. According to Social Media Today, AI-powered social media tools can increase engagement by up to 20%.

Stats & Facts:

Engagement Boost: AI-powered social media tools can increase engagement by up to 20%

Efficiency Gains: AI can reduce the time spent on social media management by up to 30%

The Future of AI in Digital Marketing Tools


As we look ahead, the role of AI in digital marketing will only continue to expand. Emerging technologies like natural language processing (NLP), computer vision, and advanced machine learning models will further enhance AI's capabilities.

Example: AI-powered voice search optimization tools will become increasingly important as more consumers use voice assistants like Siri and Alexa for online searches. By 2024, voice searches are expected to account for 50% of all online searches.

Stats & Facts:

Voice Search Growth: By 2024, voice searches are expected to account for 50% of all online searches

NLP Advancements: The global NLP market is projected to reach $43 billion by 2025

Conclusion